Via @[email protected]

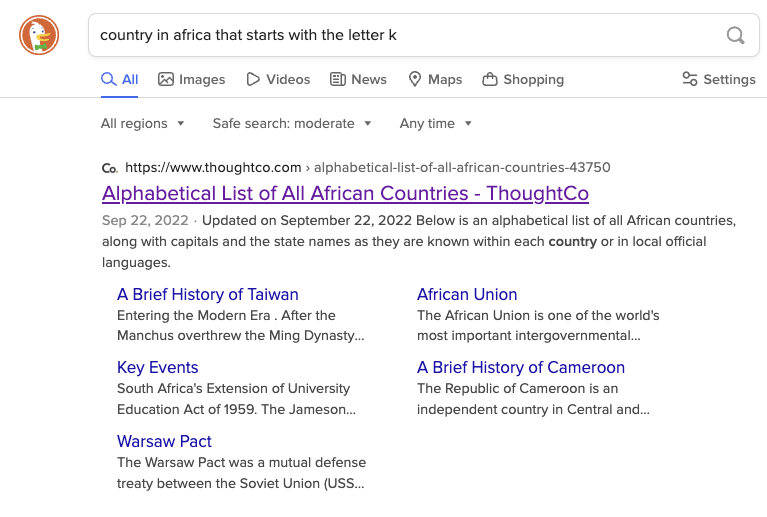

Right now, if you search for “country in Africa that starts with the letter K”:

- DuckDuckGo links to an alphabetical list of countries in Africa, which includes Kenya.
- Google's first hit is a ChatGPT transcript in which ChatGPT claims there are none, and Google's summary says the same.

This is because ChatGPT at some point ingested this popular joke:

“There are no countries in Africa that start with K.” “What about Kenya?” “Kenya suck deez nuts?”

It’s a toot. With screenshots. And everyone surely knows by now that your search results depend on your search history. And, of course, LLM output is stochastic, not deterministic. It lies at random.

It’s probably not due to that. The effect of search history tends to be overstated and gets blamed for any inconsistency. They’re not making the search page load wait on a live ChatGPT call; the card is driven by the contents of the linked website.

Differences are usually either intentional A/B testing or artifacts of Google’s global architecture. If you hit Google twice, you may be talking to different servers, potentially running different versions of the software. Companies take advantage of that to compare how users behave on each version, as a form of testing. Additionally, if you and someone on a different continent both hit Google, you’re not even using the same data center. The databases and caches in those data centers can hold different data at any given moment, though they eventually become consistent. That causes results to change both from person to person and over time.
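To make those two mechanisms concrete, here is a minimal Python sketch. It is a toy model under loudly stated assumptions, not a description of Google's actual infrastructure: `ReplicatedStore` is a hypothetical stand-in for per-data-center caches that only converge when `sync()` runs, and `ab_bucket` is a hypothetical hash-based assignment of users to a "control" or "treatment" software version (one common way to run A/B tests). The data-center names, the 10% split and the query key are all made up for illustration.

```python
import hashlib
from dataclasses import dataclass, field

# Toy model for illustration only; all names and numbers are hypothetical,
# not a description of how Google's infrastructure actually works.


@dataclass
class Replica:
    """One data center's copy of a key-value cache (e.g. query -> result card)."""
    name: str
    data: dict = field(default_factory=dict)


class ReplicatedStore:
    """Writes land on one replica first and reach the others only on sync()."""

    def __init__(self, names):
        self.replicas = {n: Replica(n) for n in names}
        self.pending = []  # writes not yet propagated to every replica

    def write(self, origin, key, value):
        self.replicas[origin].data[key] = value
        self.pending.append((key, value))

    def read(self, replica_name, key):
        # Returns None if this replica has not seen the key yet.
        return self.replicas[replica_name].data.get(key)

    def sync(self):
        # Replay pending writes in order on every replica; afterwards all agree.
        for key, value in self.pending:
            for replica in self.replicas.values():
                replica.data[key] = value
        self.pending.clear()


def ab_bucket(user_id, treatment_percent=10):
    """Hash a user ID into 0..99 and assign 'treatment' below the cutoff."""
    h = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100
    return "treatment" if h < treatment_percent else "control"


if __name__ == "__main__":
    store = ReplicatedStore(["us-east", "eu-west"])
    store.write("us-east", "country in Africa starting with K", "Kenya")
    print(store.read("us-east", "country in Africa starting with K"))  # Kenya
    print(store.read("eu-west", "country in Africa starting with K"))  # None
    store.sync()
    print(store.read("eu-west", "country in Africa starting with K"))  # Kenya
    # The same user always lands in the same bucket, so they consistently see one version.
    print(ab_bucket("alice") == ab_bucket("alice"))  # True
```

Between the write and `sync()`, two people asking the same question get different answers depending on which data center serves them, which is the window before the data centers become consistent. Hashing the user ID (rather than picking a version at random per request) keeps one user on one version, so an experiment's behavior comparisons aren't muddied by users bouncing between versions.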