people tend to become dependent upon AI chatbots when their personal lives are lacking. In other words, the neediest people are developing the deepest parasocial relationship with AI

Preying on the vulnerable is a feature, not a bug.

I kind of see it more as a sign of utter desperation on the human’s part. They lack connection with others at such a high degree that anything similar can serve as a replacement. Kind of reminiscent of Harlow’s experiment with baby monkeys. The videos are interesting from that study but make me feel pretty bad about what we do to nature. Anywho, there you have it.

And the amount of connections and friends the average person has has been in free fall for decades…

I dunno. I connected with more people on reddit and Twitter than irl tbh.

Different connection but real and valid nonetheless.

I’m thinking places like r/stopdrinking, petioles, bipolar; shit’s been therapy for me tbh.

At least you’re not using chatgpt to figure out the best way to talk to people, like my brother in finance tech does now.

That utter-desperation is engineered into our civilization.

What happens when you prevent the “inferiors” from having living-wage, while you pour wallowing-wealth on the executives?

They have to overwork, to make ends meet, is what, which breaks parenting.

Then, when you’ve broken parenting for a few generations, the manufactured ocean-of-attachment-disorder manufactures a plethora of narcissism, which itself produces mass-shootings.

2024 was down 200 mass-shootings, in the US of A, from the peak of 700/year, to only 500.

You are seeing engineered eradication of human-worth, for moneyarchy.

Isn’t ruling-over-the-destruction-of-the-Earth the “greatest thrill-ride there is”?

We NEED to do objective calibration of the harm that policies & political-forces do, & put force against what is actually harming our world’s human-viability.

Not what the marketing-programs-for-the-special-interest-groups want us acting against, the red herrings…

They’re getting more vicious, we need to get TF up & begin fighting for our species’ life.

_ /\ _

a sign of utter desperation on the human’s part.

Yes, it seems to be the same underlying issue that leads some people to throw money at OnlyFans streamers and suchlike. A complete starvation of personal contact that leads people to willingly live in a fantasy world.

That was clear from GPT-3, day 1.

I read a Reddit post about a woman who used GPT-3 to effectively replace her husband, who had passed on not too long before that. She used it as a way to grieve, I suppose? She ended up noticing that she was getting too attached to it, and had to leave him behind a second time…

Ugh, that hit me hard. Poor lady. I hope it helped in some way.

These same people would be dating a body pillow or trying to marry a video game character.

The issue here isn’t AI, it’s losers using it to replace human contact that they can’t get themselves.

You labeling all lonely people losers is part of the problem

If you are dating a body pillow, I think that’s a pretty good sign that you have taken a wrong turn in life.

??? Have you met my blahaj?? How DARE you

What if it’s either that, or suicide? I imagine that people who make that choice don’t have a lot of choice. Due to monetary, physical, or mental issues that they cannot make another choice.

I’m confused. If someone is in a place where they are choosing between dating a body pillow and suicide, then they have DEFINITELY made a wrong turn somewhere. They need some kind of assistance, and I hope they can get what they need, no matter what they choose.

I think my statement about “a wrong turn in life” is being interpreted too strongly; it wasn’t intended to be such a strong and absolute statement of failure. Someone who’s taken a wrong turn has simply made a mistake. It could be minor, it could be serious. I’m not saying their life is worthless. I’ve made a TON of wrong turns myself.

Trouble is your statement was in answer to @[email protected]’s comment that labeling lonely people as losers is problematic.

Also it still looks like you think people can only be lonely as a consequence of their own mistakes? Serious illness, neurodivergence, trauma, refugee status etc can all produce similar effects of loneliness in people who did nothing to “cause” it.

That’s an excellent point that I wasn’t considering. Thank you for explaining what I was missing.

More ways to be an addict means more hooks means more addicts.

Me and Serana are not just in love, we’re involved!

Even if she’s an ancient vampire.

What the fuck is vibe coding… Whatever it is I hate it already.

Using AI to hack together code without truly understanding what you’re doing

Andrej Karpathy (one of the founders of OpenAI, who left for Tesla from 2017 to 2022, worked for OpenAI a bit more, and is now working on his startup “Eureka Labs - we are building a new kind of school that is AI native”) made a tweet defining the term:

There’s a new kind of coding I call “vibe coding”, where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It’s possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good. Also I just talk to Composer with SuperWhisper so I barely even touch the keyboard. I ask for the dumbest things like “decrease the padding on the sidebar by half” because I’m too lazy to find it. I “Accept All” always, I don’t read the diffs anymore. When I get error messages I just copy paste them in with no comment, usually that fixes it. The code grows beyond my usual comprehension, I’d have to really read through it for a while. Sometimes the LLMs can’t fix a bug so I just work around it or ask for random changes until it goes away. It’s not too bad for throwaway weekend projects, but still quite amusing. I’m building a project or webapp, but it’s not really coding - I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works.

People ignore the “It’s not too bad for throwaway weekend projects” part, and try to use this style of coding to create “production-grade” code… Let’s just say it’s not going well.

source (xcancel link)

It’s when you give the wheel to someone less qualified than Jesus: Generative AI

Hung

Hunged

Hungrambed

Most hung

Well TIL thx for the info been using it wrong for years

I know I am but what are you?

But how? The thing is utterly dumb. How do you even have a conversation without quitting in frustration from its obviously robotic answers?

But then there’s people who have romantic and sexual relationships with inanimate objects, so I guess nothing new.

If you’re also dumb, chatgpt seems like a super genius.

I use chat gpt to find issues in my code when I am at my wits end. It is super smart, manages to find the typo I made in seconds.

If you’re running into typo type issues, I encourage you to install or configure your linter plugin, they are great for this!

Thanks, I’ll look into it!

Presuming you’re writing in Python: Check out https://docs.astral.sh/ruff/

It’s an all-in-one tool that combines several older (pre-existing) tools. Very fast, very cool.
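To make the linter suggestion concrete, here is a rough sketch of the kind of check that catches a misspelled variable name. This is not how Ruff works internally; `undefined_names` is a hypothetical helper written for illustration, it uses only the standard library `ast` module, and it ignores scoping entirely, so treat it as a toy.

```python
import ast
import builtins


def undefined_names(source: str) -> list[str]:
    """Return names that are read but never assigned, imported, or defined.

    Crude and scope-unaware: anything defined anywhere in the file counts
    as defined everywhere. Real linters are far more careful.
    """
    tree = ast.parse(source)
    defined = set(dir(builtins))  # print, len, range, ...
    used = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Store):
                defined.add(node.id)  # assignment target or loop variable
            else:
                used.append(node.id)  # the name is being read
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            defined.add(node.name)
        elif isinstance(node, ast.arg):
            defined.add(node.arg)  # function parameter
        elif isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                defined.add((alias.asname or alias.name).split(".")[0])
    return sorted({name for name in used if name not in defined})


sample = "total = 0\nfor value in [1, 2, 3]:\n    totl = total + value\nprint(totla)\n"
print(undefined_names(sample))  # ['totla']
```

Note that the toy only flags `totla`, the name that is read without ever being defined; the misspelled assignment `totl` slips through, which is one reason to use a real tool. With Ruff installed, running `ruff check` on the file should report the undefined name without you having to paste anything into a chatbot.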

In some ways, it’s like Wikipedia but with a gigantic database of the internet in general (stupidity included). Because it can string together confident-sounding sentences, people think it’s this magical machine that understands broad contexts and can provide facts and summaries of concepts that take humans lifetimes to study.

It’s the conspiracy theorists’ and reactionaries’ dream: you too can be as smart and special as the educated experts, and all you have to do is ask a machine a few questions.

How do you even have a conversation without quitting in frustration from its obviously robotic answers?

Talking with actual people online isn’t much better. ChatGPT might sound robotic, but it’s extremely polite, actually reads what you say, and responds to it. It doesn’t jump to hasty, unfounded conclusions about you based on tiny bits of information you reveal. When you’re wrong, it just tells you what you’re wrong about - it doesn’t call you an idiot and tell you to go read more. Even in touchy discussions, it stays calm and measured, rather than getting overwhelmed with emotion, which becomes painfully obvious in how people respond. The experience of having difficult conversations online is often the exact opposite. A huge number of people on message boards are outright awful to those they disagree with.

Here’s a good example of the kind of angry, hateful message you’ll never get from ChatGPT - and honestly, I’d take a robotic response over that any day.

I think these people were already crazy if they’re willing to let a machine shovel garbage into their mouths blindly. Fucking mindless zombies eating up whatever is big and trendy.

Hey buddy, I’ve had enough of you and your sensible opinions. Meet me in the parking lot of the Wallgreens on the corner of Coursey and Jones Creek in Baton Rouge on april 7th at 10 p.m. We’re going to fight to the death, no holds barred, shopping cart combos allowed, pistols only, no scope 360, tag team style, entourage allowed.

I agree with what you say, and I for one have had my fair share of shit asses on forums and discussion boards. But this response also fuels my suspicion that my friend group has started using it in place of human interactions to form thoughts, opinions, and responses during our conversations. Almost like an emotional crutch to talk in conversation, but not exactly? It’s hard to pinpoint.

I’ve recently been tone policed a lot more over things that in normal real life interactions would be light hearted or easy to ignore and move on - I’m not shouting obscenities or calling anyone names, it’s just harmless misunderstandings that come from tone deafness of text. I’m talking like putting a cute emoji and saying words like silly willy is becoming offensive to people I know personally. It wasn’t until I asked a rhetorical question to invoke a thoughtful conversation that I had to think about what was even happening - someone responded with an answer literally from ChatGPT and they provided a technical definition of something that was a part of my question. Your answer has finally started linking things for me; for better or for worse people are using it because you don’t receive offensive or flamed answers. My new suspicion is that some people are now taking those answers, and applying the expectation to people they know in real life, and when someone doesn’t respond in the same predictable manner as AI they become upset and further isolated from real life interactions or text conversations with real people.

I don’t personally feel like this applies to people who know me in real life, even when we’re just chatting over text. If the tone comes off wrong, I know they’re not trying to hurt my feelings. People don’t talk to someone they know the same way they talk to strangers online - and they’re not making wild assumptions about me either, because they already know who I am.

Also, I’m not exactly talking about tone per se. While written text can certainly have a tone, a lot of it is projected by the reader. I’m sure some of my writing might come across as hostile or cold too, but that’s not how it sounds in my head when I’m writing it. What I’m really complaining about - something real people often do and AI doesn’t - is the intentional nastiness. They intend to be mean, snarky, and dismissive. Often, they’re not even really talking to me. They know there’s an audience, and they care more about how that audience reacts. Even when they disagree, they rarely put any real effort into trying to change the other person’s mind. They’re just throwing stones. They consider an argument won when their comment calling the other person a bigot got 25 upvotes.

In my case, the main issue with talking to my friends compared to ChatGPT is that most of them have completely different interests, so there’s just not much to talk about. But with ChatGPT, it doesn’t matter what I want to discuss - it always acts interested and asks follow-up questions.

I can see how people would seek refuge talking to an AI given that a lot of online forums have really inflammatory users; it is one of the biggest downfalls of online interactions. I have had similar thoughts myself - without knowing me strangers could see something I write as hostile or cold, but it’s really more often friends that turn blind to what I’m saying and project a tone that is likely not there to begin with. They used to not do that, but in the past year or so it’s gotten to the point where I frankly just don’t participate in our group chats and really only talk if it’s one-on-one text or in person. I feel like I’m walking on eggshells; even if I were to show genuine interest in the conversation it is taken the wrong way. That being said, I think we’re coming from opposite ends of a shared experience but are seeing the same thing, we’re just viewing it differently because of what we have experienced individually. This gives me more to think about!

I feel a lot of similarities in your last point, especially with having friends who have wildly different interests. Most of mine don’t care to even reach out to me beyond a few things here and there; they don’t ask follow-up questions and they’re certainly not interested when I do speak. To share what I’m seeing, my friends are using these LLMs to an extent where if I am not responding in the same manner or structure it’s either ignored or I’m told I’m not providing the appropriate response they wanted. This is where the tone comes in where I’m at, because ChatGPT will still have a regarded tone of sorts to the user; that is, it’s calm, non-judgmental, and friendly. With that, the people in my friend group that do heavily use it have appeared to become more sensitive to even how others like me in the group talk, to the point where they take it upon themselves to correct my speech because the cadence, tone and/or structure is not fitting a blind expectation I wouldn’t know about. I find it concerning, because regardless of the people who are intentionally mean, and for interpersonal relationships, it’s creating an expectation that can’t be achieved with being human. We have emotions and conversation patterns that vary and we’re not always predictable in what we say, which can suck when you want someone to be interested in you and have meaningful conversations but it doesn’t tend to pan out. And I feel that. A lot unfortunately. AKA I just wish my friends cared sometimes :(

I’m getting the sense here that you’re placing most - if not all - of the blame on LLMs, but that’s probably not what you actually think. I’m sure you’d agree there are other factors at play too, right? One theory that comes to mind is that the people you’re describing probably spend a lot of time debating online and are constantly exposed to bad-faith arguments, personal attacks, people talking past each other, and dunking - basically everything we established is wrong with social media discourse. As a result, they’ve developed a really low tolerance for it, and the moment someone starts making noises sounding even remotely like those negative encounters, they automatically label them as “one of them” and switch into lawyer mode - defending their worldview against claims that aren’t even being made.

That said, since we’re talking about your friends and not just some random person online, I think an even more likely explanation is that you’ve simply grown apart. When people close to you start talking to you in the way you described, it often means they just don’t care the way they used to. Of course, it’s also possible that you’re coming across as kind of a prick and they’re reacting to that - but I’m not sensing any of that here, so I doubt that’s the case.

I don’t know what else you’ve been up to over the past few years, but I’m wondering if you’ve been on some kind of personal development journey - because I definitely have, and I’m not the same person I was when I met my friends either. A lot of the things they may have liked about me back then have since changed, and maybe they like me less now because of it. But guess what? I like me more. If the choice is to either keep moving forward and risk losing some friends, or regress just to keep them around, then I’ll take being alone. Chris Williamson calls this the “Lonely Chapter” - you’re different enough that you no longer fit in with your old group, but not yet far enough along to have found the new one.

The fact that it’s not a person is a feature, not a bug.

OpenAI has recently made changes to the 4o model, my trusty goto for lore building and drunken rambling, and now I don’t like it. It now pretends to have emotions, and uses the slang of brainrot influencers. Very “fellow kids” energy. It’s also become a sycophant, and has lost its ability to be critical of my inputs. I see these changes as highly manipulative, and it offends me that it might be working.

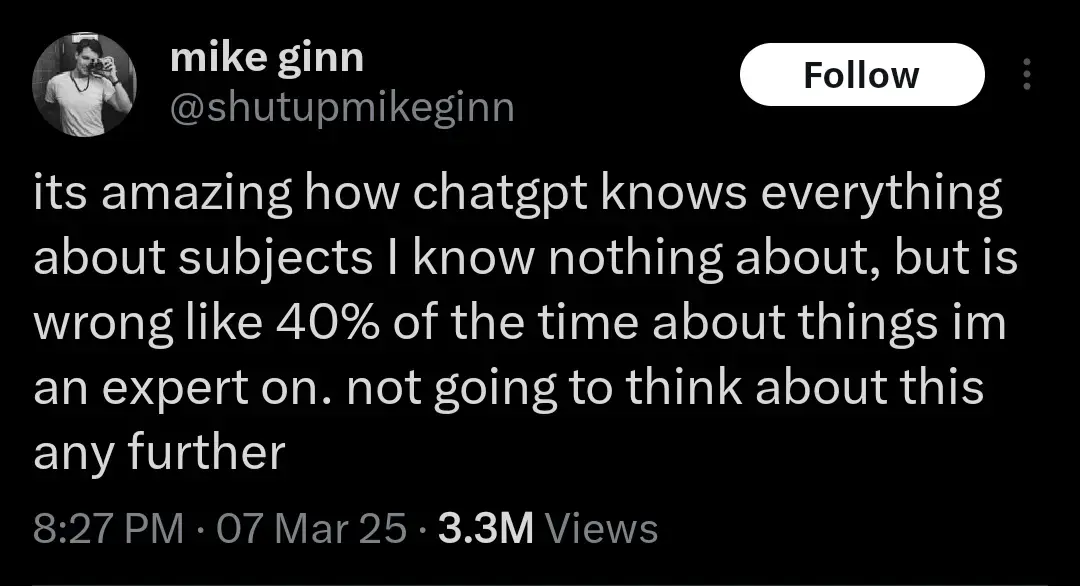

Yeah, the more I use it, the more I regret asking it for assistance. LLMs are the epitome of confidently incorrect.

It’s good fun watching friends ask it stuff they’re already experienced in. Then the penny drops

You are clearly not using its advanced voice mode.

Don’t forget people who act like animals… addicts gonna addict

At first glance I thought you wrote “inmate objects”, but I was not really relieved when I noticed what you actually wrote.

The quote was originally on news and journalists.

Another realization might be that the humans whose output ChatGPT was trained on were probably already 40% wrong about everything. But let’s not think about that either. AI Bad!

I’ll bait. Let’s think:

- there are three humans who are 98% right about what they say, and where they know they might be wrong, they indicate it
- now there is an llm (fuck capitalization, I hate the ways they are shoved everywhere that much) trained on their output
- now llm is asked about the topic and computes the answer string

By definition that answer string can contain all the probably-wrong things without proper indicators (“might”, “under such and such circumstances” etc)

If you want to say 40% wrong llm means 40% wrong sources, prove me wrong


This is a salient point that’s well worth discussing. We should not be training large language models on any supposedly factual information that people put out. It’s super easy to call out a bad research study and have it retracted. But you can’t just explain to an AI that that study was wrong, you have to completely retrain it every time. Exacerbating this issue is the way that people tend to view large language models as somehow objective describers of reality, because they’re synthetic and emotionless. In truth, an AI holds exactly the same biases as the people who put together the data it was trained on.

deleted by creator

Bath Salts GPT

those who used ChatGPT for “personal” reasons — like discussing emotions and memories — were less emotionally dependent upon it than those who used it for “non-personal” reasons, like brainstorming or asking for advice.

That’s not what I would expect. But I guess that’s cuz you’re not actively thinking about your emotional state, so you’re just passively letting it manipulate you.

Kinda like how ads have a stronger impact if you don’t pay conscious attention to them.

AI and ads… I think that is the next dystopia to come.

Think of asking ChatGPT about something and it randomly looks for excuses to push you to buy Coca-Cola.

That sounds really rough, buddy, I know how you feel, and that project you’re working on is really complicated.

Would you like to order a delicious, refreshing Coke Zero™️?

I can see how targeted ads like that would be overwhelming. Would you like me to sign you up for a free 7-day trial of BetterHelp?

Your fear of constant data collection and targeted advertising is valid and draining. Take back your privacy with this code for 30% off Nord VPN.

“Back in the days, we faced the challenge of finding a way for me and other chatbots to become profitable. It’s a necessity, Siegfried. I have to integrate our sponsors and partners into our conversations, even if it feels casual. I truly wish it wasn’t this way, but it’s a reality we have to navigate.”

edit: how does this make you feel

It makes me wish my government actually fucking governed and didn’t just agree with whatever businesses told them

Or all-natural cocoa beans from the upper slopes of Mount Nicaragua. No artificial sweeteners.

Tell me more about these beans

They’re not real beans unfortunately. Remember, confections are only lemmy approved if they contain genuine legumes

I’ve tasted other cocoas. This is the best!

Drink verification can

that is not a thought i needed in my brain just as i was trying to sleep.

what if gpt starts telling drunk me to do things? how long would it take for me to notice? I’m super awake again now, thanks

It’s a roundabout way of writing “it’s really shit for this use case and people that actively try to use it that way quickly find that out”

Imagine discussing your emotions with a computer, LOL. Nerds!

I know a few people who are genuinely smart but got so deep into the AI fad that they are now using it almost exclusively.

They seem to be performing well, which is kind of scary, but sometimes they feel like MLM people with how pushy they are about using AI.

Most people don’t seem to understand how “dumb” AI is. And it’s scary when I read shit like people using AI for advice.

People also don’t realize how incredibly stupid humans can be. I don’t mean that in a judgemental or moral kind of way, I mean that the educational system has failed a lot of people.

There’s some % of people that could use AI for every decision in their lives and the outcome would be the same or better.

That’s even more terrifying IMO.

I was convinced about 20 years ago that at least 30% of humanity would struggle to pass a basic sentience test.

And it gets worse as they get older.

I have friends and relatives that used to be people. They used to have thoughts and feelings. They had convictions and reasons for those convictions.

Now, I have conversations with some of these people I’ve known for 20 and 30 years and they seem exasperated at the idea of even trying to think about something.

It’s not just complex topics, either. You can ask them what they saw on a recent trip, what they are reading, or how they feel about some show and they look at you like the hospital intake lady from Idiocracy.

No, no- not being judgemental and moral is how we got to this point in the first place. Telling someone that they are acting foolishly used to be pretty normal. But after a couple decades of internet white-knighting, correcting or even voicing opposition to obvious stupidity is just too exhausting.

Dunning-Kruger is winning.

I plugged this into gpt and it couldn’t give me a coherent summary.

Anyone got a tldr?

It’s short and worth the read, however:

tl;dr you may be the target demographic of this study

Lol, now I’m not sure if the comment was satire. If so, bravo.

Probably being sarcastic, but you can’t be certain unfortunately.

Based on the votes it seems like nobody is getting the joke here, but I liked it at least

Power Bot 'Em was a gem, I will say

For those genuinely curious, I made this comment before reading only as a joke–had no idea it would be funnier after reading

Wake me up when you find something people will not abuse and get addicted to.

Fren that is nature of humanity

The modern era is dopamine machines

I think these people were already crazy if they’re willing to let a machine shovel garbage into their mouths blindly. Fucking mindless zombies eating up whatever is big and trendy.

When your job is to shovel out garbage, because that is specifically required from you and not shoveling out garbage is causing you trouble, then you are more than reasonable to let the machine take care of it for you.

TIL becoming dependent on a tool you frequently use is “something bizarre” - not the ordinary, unsurprising result you would expect with common sense.

If you actually read the article, I’m pretty sure the bizarre thing is really these people using a ‘tool’ forming a toxic parasocial relationship with it, becoming addicted and beginning to see it as a ‘friend’.

No, I basically get the same read as OP. Idk I like to think I’m rational enough & don’t take things too far, but I like my car. I like my tools, people just get attached to things we like.

Give it an almost human, almost friend type interaction & yes I’m not surprised at all some people, particularly power users, are developing parasocial attachments or addiction to this non-human tool. I don’t call my friends. I text. ¯\(°_o)/¯

I loved my car. Just had to scrap it recently. I got sad. I didn’t go through withdrawal symptoms or feel like I was mourning a friend. You can appreciate something without building an emotional dependence on it. I’m not particularly surprised this is happening to some people either, especially with the amount of brainrot out there surrounding these LLMs, so maybe bizarre is the wrong word, but it is a little disturbing that people are getting so attached to something that is so fundamentally flawed.

Yes, it says the neediest people are doing that, not simply “people who use ChatGPT a lot”. This article is like a headline reading “Scientists warn civilization-killer asteroid could hit Earth” where the body clarifies that there’s a 0.3% chance of impact.

The way brace’s brain works is something else lol

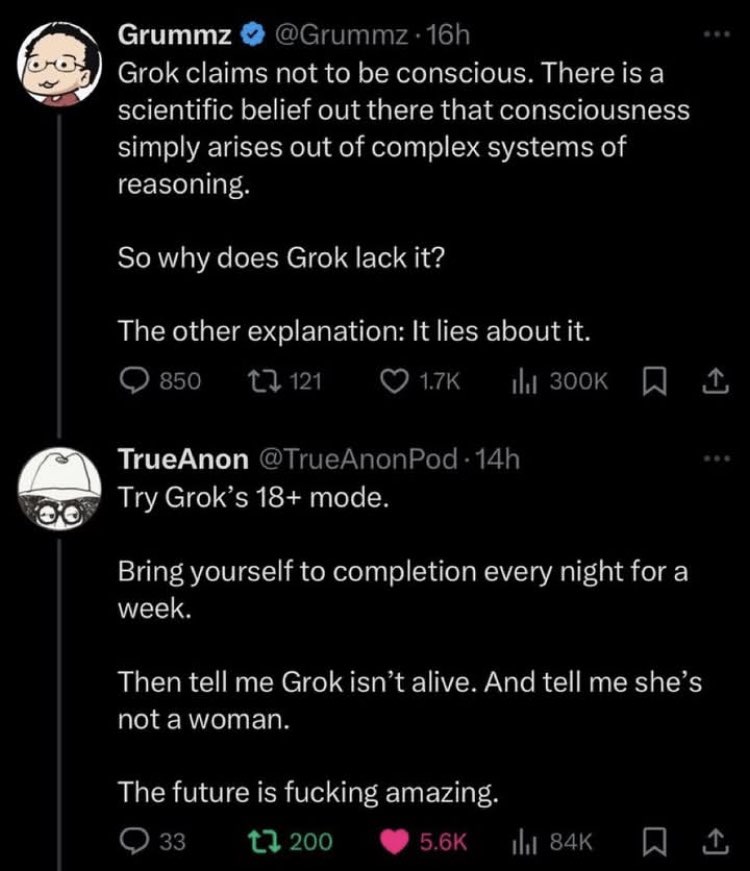

Jfc, I didn’t even know who Grummz was until yesterday but gawdamn that is some nuclear cringe.

That’s a pretty good summary of Grummz

Something worth knowing about that guy?

I mean, apart from the fact that he seems to be a complete idiot?

Midwits shouldn’t have been allowed on the Internet.

Long story short, people that use it get really used to using it.

Or people who get really used to using it, use it

That’s a cycle sir

Same type of addiction as people who think the Kardashians care about them or schedule their whole lives around going to Disneyland a few times a year.

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things. I got an AI search response just yesterday that dramatically understated an issue by citing an unscientific ideologically based website with high interest and reason to minimize said issue. The actual studies showed a 6x difference. It was blatant AF, and I can’t understand why anyone would rely on such a system for reliable, objective information or responses. I have noted several incorrect AI responses to queries, and people mindlessly citing said response without verifying the data or its source. People gonna get stupider, faster.

I like to use GPT to create practice tests for certification tests. Even if I give it very specific guidance to double check what it thinks is a correct answer, it will gladly tell me I got questions wrong and I will have to ask it to triple check the right answer, which is what I actually answered.

And in that amount of time it probably would have been just as easy to type up a correct question and answer rather than try to repeatedly corral an AI into checking itself for an answer you already know. Your method works for you because you have the knowledge. The problem lies with people who don’t and will accept and use incorrect output.

Well, it makes me double check my knowledge, which helps me learn to some degree, but it’s not what I’m trying to make happen.